11.1

Cerb (11.1) is a feature upgrade released on April 24, 2025. It includes more than 41 new features and improvements from community feedback.

To check if you qualify for this release as a free update, view Setup » Configure » License. If your software updates expire on or after March 31, 2025, then you can upgrade without renewing your license. Cerb Cloud subscribers will be upgraded automatically.

Important Release Notes

-

Cerb 11.1 requires PHP 8.2+ and MySQL 8.0+ (or MariaDB 10.5+).

-

To upgrade your installation, follow these instructions.

Added

-

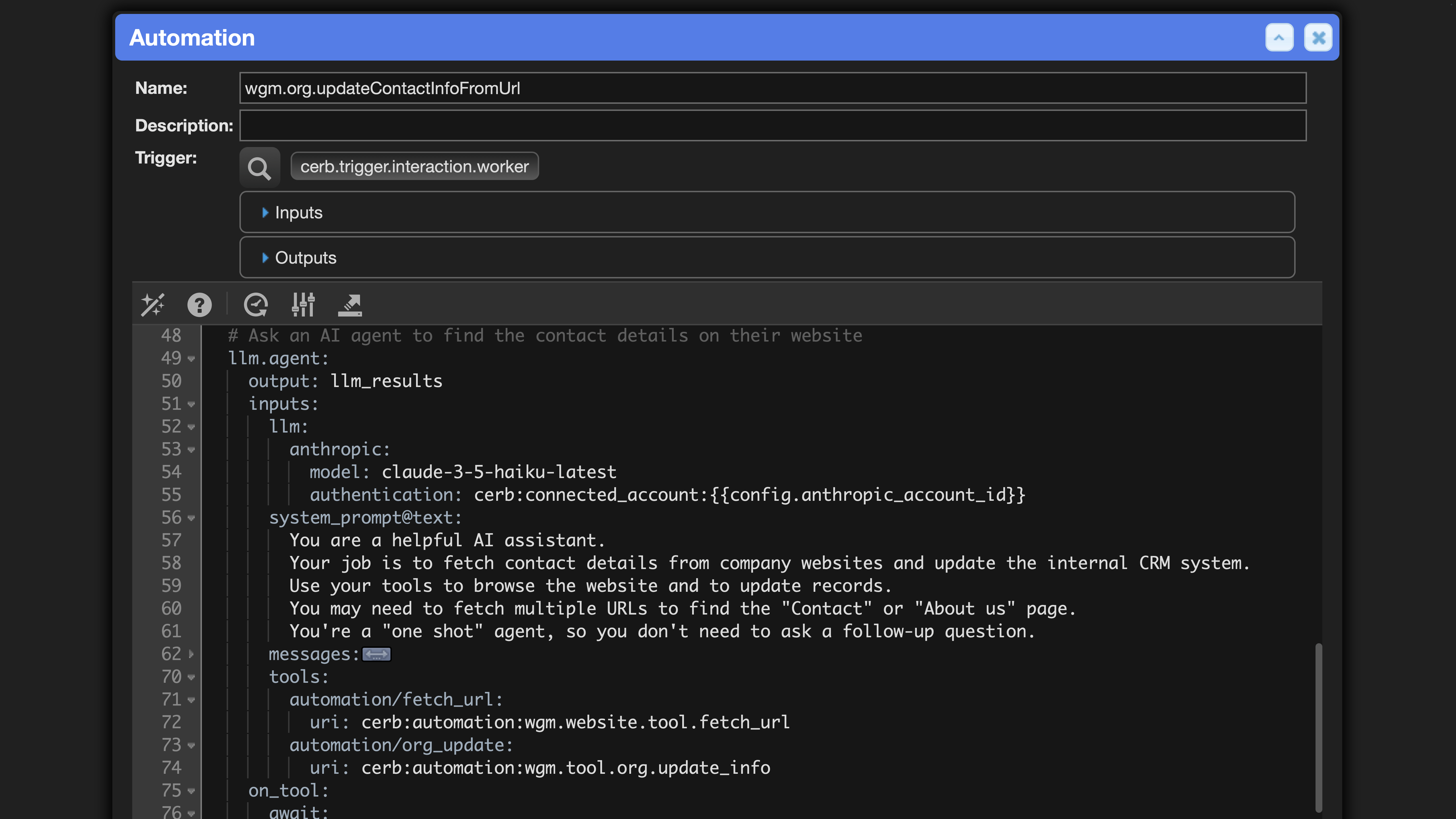

[Automations/LLM] In automations, added an llm.agent: command to simplify integrations with generative large language models (LLMs). The agent automatically manages chat history and tool use. Tools can be created using llm.tool automations, which are documented automatically from their inputs: definitions. The llm.agent: command automatically invokes tools and returns their output to the model. Integrations are included for Anthropic, AWS Bedrock, OpenAI, Groq, Ollama, and Together.ai.

-

[Automations/LLM] In the llm.agent: automation command, non-automation tools: can be defined with type tool: along with a description and parameters. When a model uses one of these tools, the on_tool: event runs custom logic with a __tool placeholder object containing the keys id, name, and parameters. A result must be returned using the tool.return: command. For example, this can use await:interaction: to delegate to any interaction, or to implement "human in the loop" manual approval workflows.

-

[Automations/LLM] In automations, the llm.agent: command now returns a session_id to its output key. This can be used with the llmTranscript form element to render the chat history.

-

[Automations/LLM] In automations, refactored the llm.agent: command so all tool use (including automations) executes the on_tool: branch. The same __tool placeholders are available as for custom tools. This allows real-time updates to be returned to the user during tool use, resetting the 30-second HTTP request time limit. Long-running deep research is now possible.

-

[Automations/LLM] Added an llm.tool automation trigger. A collection of these tools can be provided to an llm.agent: using a large language model (LLM) that supports function calling (tool use). The inputs of an llm.tool automation are used to automatically generate the JSON Schema expected by common models. For instance, tools can provide access to Cerb data or integrate with third party APIs.

-

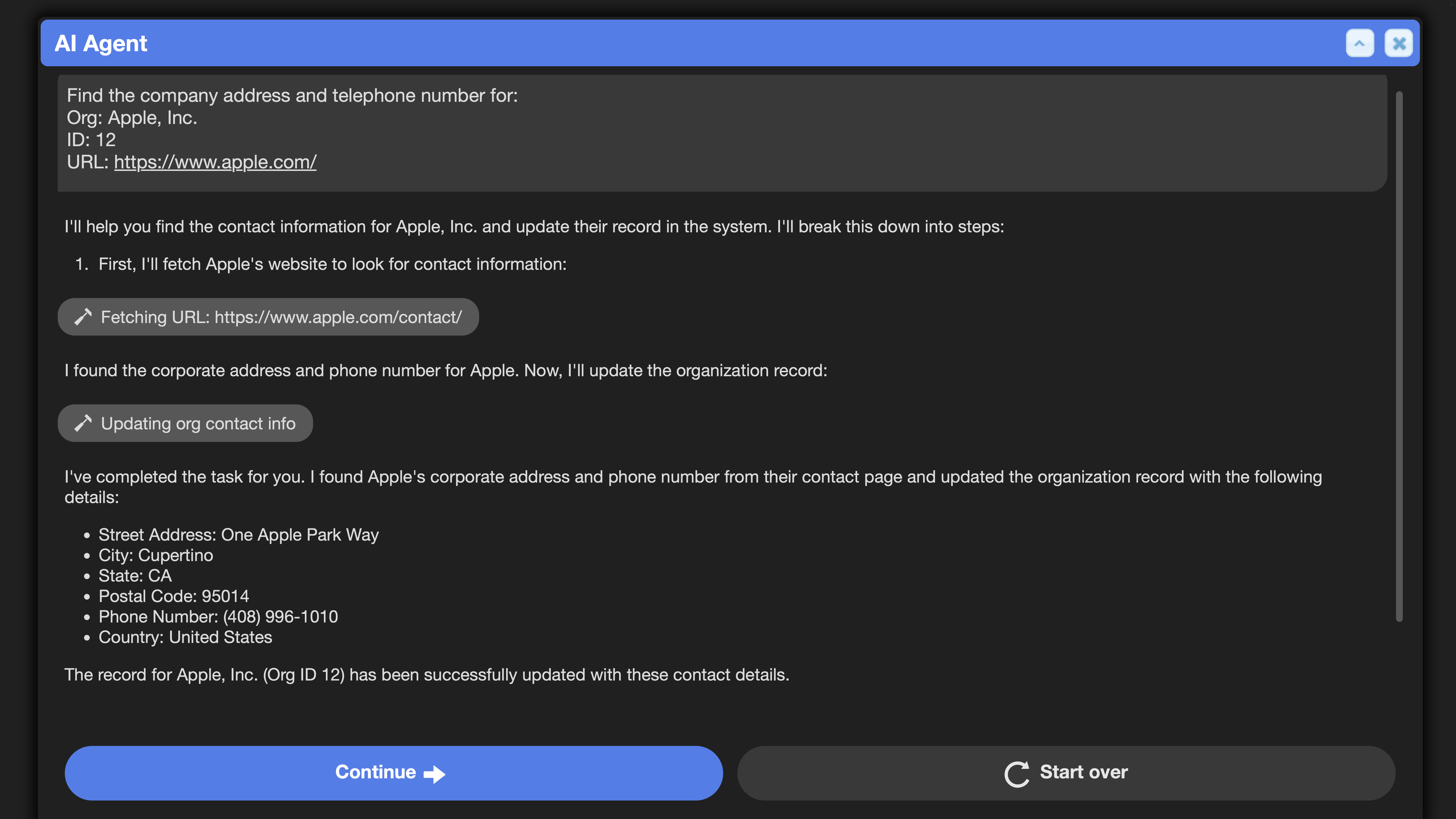

[Interactions/Worker] In interaction.worker automations, added a new llmTranscript: form element. In two lines of code, this automatically handles rendering a chat history from an LLM session ID, with copy-to-clipboard, thumbs up/down ratings, etc. Previously, this had to be tediously implemented using a sheet element.

-

[Interactions/Worker] In interaction.worker automations, the llmTranscript: form element allows custom labels per tool. For instance, rather than "Tool: docs_search", the response can be "Searching documentation using 'query'". Tool use callouts are visually distinct from chat responses.

-

[Interactions/Website] In interaction.website automations, added a new llmTranscript: form element. In two lines of code, this automatically handles rendering a chat history from an LLM session ID, with copy-to-clipboard, thumbs up/down ratings, etc. Previously, this had to be tediously implemented using a sheet element.

-

[Interactions/Website] In interaction.website automations, the llmTranscript: form element allows custom labels per tool. For instance, rather than "Tool: docs_search", the response can be "Searching documentation using 'query'". Tool use callouts are visually distinct from chat responses.

-

[Devblocks/Platform/LLM] Added an LLM service to the Devblocks platform. This interfaces with Large Language Model APIs for generative text (e.g. Q&A, summarization, classification, report generation, email drafts).

-

[Platform/LLM/Ollama] Added an ollama provider to the LLM service. This can connect to locally hosted open-source large language models through the Ollama API.

-

[Platform/LLM/OpenAI] Added an openai provider to the LLM service. This can connect to hosted large language models through the OpenAI API (e.g. gpt-4o). A customizable api_endpoint_url also allows the use of other compatible services.

-

[Platform/LLM/OpenAI] In the llm.agent: automation command, llm:openai:api_endpoint_url: now includes an endpoint example for Docker Model Runner.

-

[Platform/LLM/Groq] Added a groq provider to the LLM service. This connects to fast, hosted, open-source large language models through the Groq API.

-

[Platform/LLM/Anthropic] Added an anthropic provider to the LLM service. This connects to hosted large language models through the Anthropic API (e.g. claude-3-5-sonnet-20241022).

-

[Platform/LLM/Bedrock] Added an aws_bedrock provider to the LLM service. This connects to hosted large language models through the Amazon Bedrock API (e.g. anthropic.claude-3-haiku-20240307-v1:0). This is ideal when hosting Cerb within AWS services, since inference remains private within the same region.

-

[Platform/LLM/HuggingFace] Added a huggingface provider to the LLM service. This uses the Serverless Inference API by default, but Inference Endpoints are also supported.

-

[Platform/LLM/TogetherAI] Added a together provider to the LLM service. This uses the together.ai inference cloud service.

-

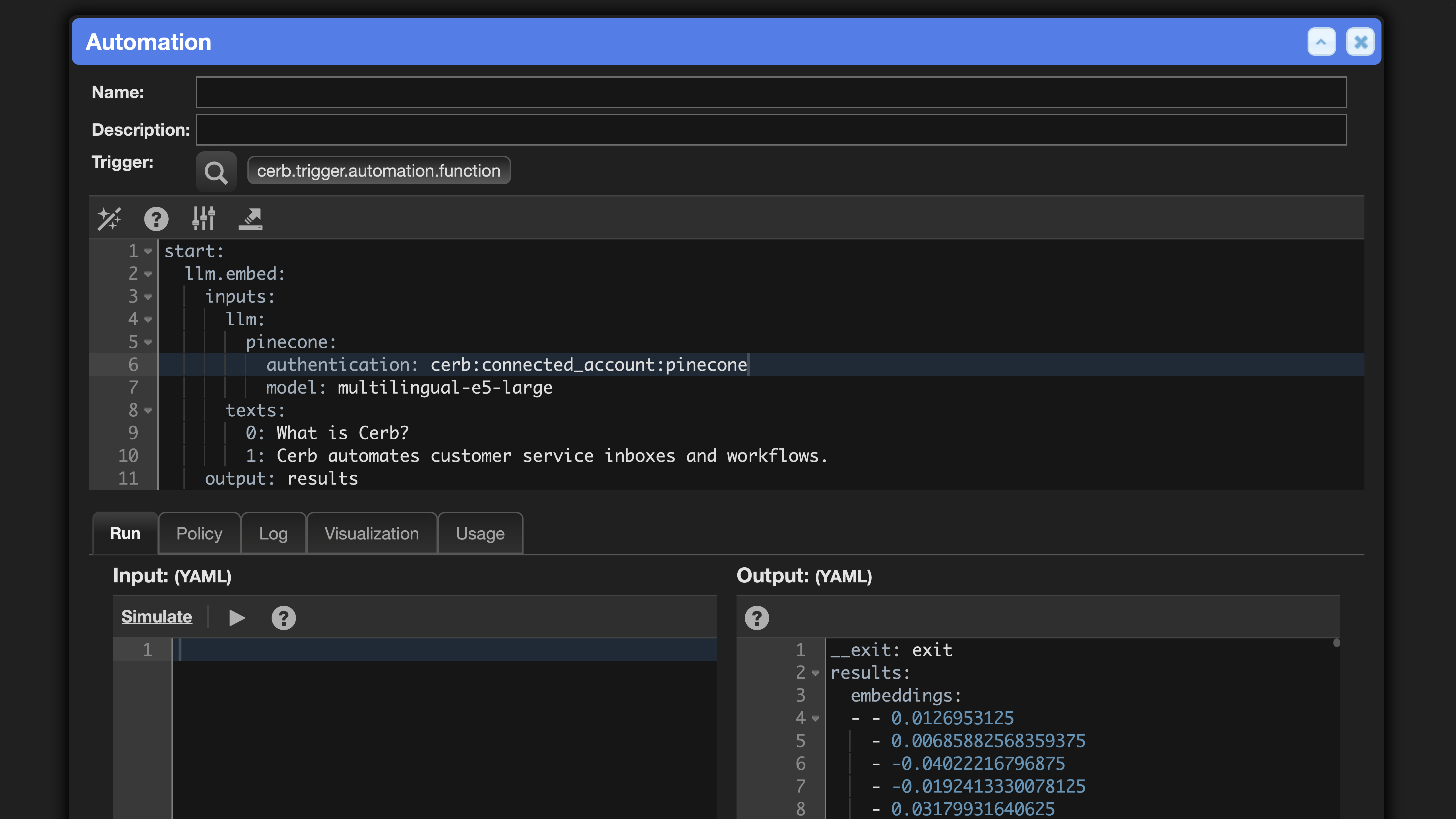

[Platform/LLM/Pinecone] Added a pinecone provider to the LLM service. This uses the Pinecone text embedding API.

-

[Platform/LLM/VoyageAI] Added a voyage provider to the LLM service. This uses the Voyage AI text embedding API.

-

[Automations/LLM] Added an llm.embed: command to automations. This uses LLM providers that support the 'Embedding' interface (e.g. OpenAI, Ollama, Hugging Face) to produce vector embeddings for blocks of text. For instance, knowledgebase articles and FAQs can be embedded for semantic search.

-

[Automations/Inputs] In automations, each input can now set an optional description: key. This is used for documentation, autocompletion, and JSON Schema in LLM tool use.

-

[Automations/Inputs] In automations, text: inputs can now set an optional allowed_values: key to create picklists. This is used for documentation, autocompletion, and JSON Schema in LLM tool use.

-

[Devblocks/Platform/LLM] Added a 'DatabaseHistory' memory module to the LLM service for managing large language model chat history and context.

-

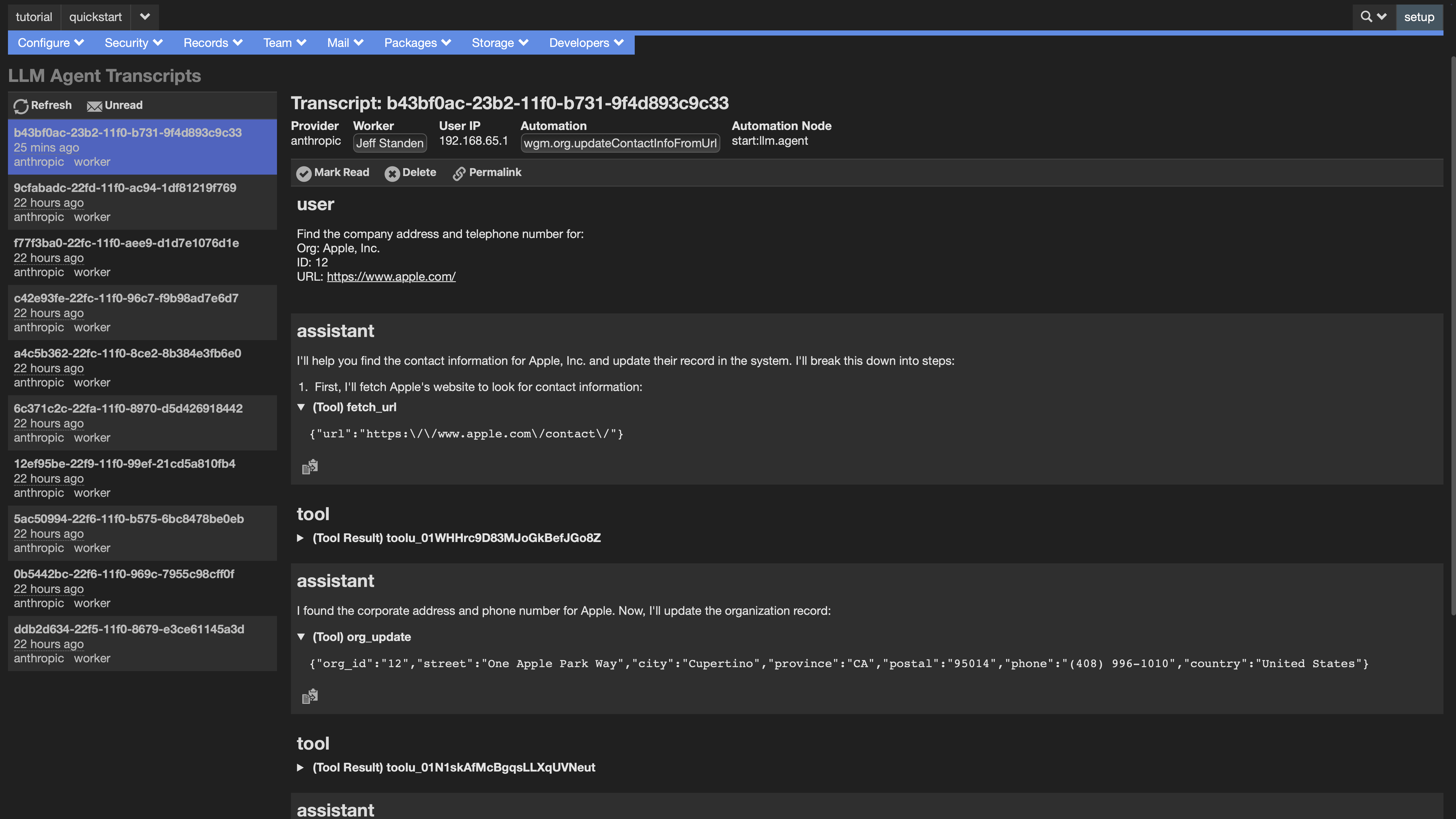

[Automations/LLM] In automations, llm.agent: transcripts are now viewable in Setup » Developers » LLM Agent Transcripts. Transcripts can be marked read or deleted. When an agent uses a tool, its parameters and results are shown in the transcript. This makes debugging and compliance much easier. It also provides valuable feedback for improving agents based on past user interactions.

-

[Automations/LLM] In LLM Transcripts, a 'Permalink' button provides a direct link for sharing transcripts.

-

[Automations/LLM] When viewing LLM agent chat transcripts, metadata is displayed at the top (e.g. provider, user, client IP, automation, and automation node).

-

[Automations/LLM] When viewing LLM agent chat transcripts, added a 'Copy' button to copy the plaintext (Markdown) content from a message to the browser clipboard.

-

[Interactions/Website] In interaction.website automations, submit: form elements can now set the width of each button using size: (whole, half, third, or quarter).

-

[Interactions/Website] In interaction.website automations, say: form elements can now set several styles: options: text-center, text-large, text-left, text-right, or text-small.

-

[Worklists/Search/Performance] Improved the performance of many complex search queries by running some subqueries independently and merging the results by ID. This is enabled by default and can be disabled with the APP_OPT_SQL_SUBQUERY_TO_IDS configuration option.

-

[Interactions/Website] In website interactions, the submit: form element has a new is_automatic@bool: option. When true, the form is automatically submitted after it is rendered. This is particularly useful before a time-intensive operation like LLM text generation, since the interaction instantly transitions to a waiting spinner.

-

[Interactions/Worker] In worker interactions, the submit: form element has a new is_automatic@bool: option. This is now preferred to await:duration: since it can render other form elements during the wait (e.g. say, sheet, LLM transcript).
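The llm.agent: tool-use items above describe a loop. The model requests a tool, the on_tool: branch handles it, and the result goes back to the model until it answers. The Python sketch below illustrates that loop in general terms only. It is not Cerb's implementation or KATA syntax. The model is a scripted stub, and the names ToolCall, ModelReply, run_agent, scripted_model, and the docs_search tool are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class ToolCall:
    # Same keys as the __tool placeholder: id, name, parameters
    id: str
    name: str
    parameters: dict[str, Any]


@dataclass
class ModelReply:
    text: str = ""
    tool_calls: list[ToolCall] = field(default_factory=list)


def run_agent(model: Callable[[list[dict]], ModelReply],
              on_tool: Callable[[ToolCall], str],
              prompt: str,
              max_turns: int = 5) -> tuple[str, list[dict]]:
    """Loop until the model answers without requesting a tool."""
    history: list[dict] = [{"role": "user", "content": prompt}]
    for _ in range(max_turns):
        reply = model(history)
        history.append({"role": "assistant", "content": reply.text,
                        "tool_calls": [c.name for c in reply.tool_calls]})
        if not reply.tool_calls:
            return reply.text, history
        for call in reply.tool_calls:
            # Every tool call is routed through the single on_tool handler,
            # which is where approval or progress updates could happen
            history.append({"role": "tool", "tool_call_id": call.id,
                            "content": on_tool(call)})
    raise RuntimeError("no final answer within max_turns")


def scripted_model(history: list[dict]) -> ModelReply:
    # Stub model: request a tool first, then answer using the tool's result
    last = history[-1]
    if last["role"] == "tool":
        return ModelReply(text=f"Found: {last['content']}")
    return ModelReply(tool_calls=[ToolCall("call_1", "docs_search",
                                           {"query": "llm.agent"})])


def on_tool(call: ToolCall) -> str:
    # Hypothetical tool handler
    if call.name == "docs_search":
        return f"1 article about {call.parameters['query']}"
    return f"error: unknown tool {call.name}"


answer, history = run_agent(scripted_model, on_tool, "How do agents work?")
print(answer)  # Found: 1 article about llm.agent
```

The max_turns cap is a design choice in this sketch. It keeps a model that keeps requesting tools from looping forever.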
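The openai provider's customizable api_endpoint_url works with "other compatible services" because an OpenAI-compatible server accepts the same chat completions request shape. In principle, only the URL (and key) changes. The sketch below uses Python's standard library to build such a request without sending it. The endpoint URL, model name, and key are placeholders, not values from Cerb's configuration.

```python
import json
import urllib.request


def build_chat_request(endpoint_url: str, model: str, prompt: str,
                       api_key: str = "") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    body = {"model": model,
            "messages": [{"role": "user", "content": prompt}]}
    headers = {"Content-Type": "application/json"}
    if api_key:
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(endpoint_url,
                                  data=json.dumps(body).encode("utf-8"),
                                  headers=headers, method="POST")


# Placeholder URL for a locally hosted, OpenAI-compatible server
req = build_chat_request("http://localhost:8080/v1/chat/completions",
                         "my-local-model", "Summarize this ticket.",
                         api_key="sk-placeholder")
print(req.get_method(), req.full_url)
# POST http://localhost:8080/v1/chat/completions
```

Sending it would be urllib.request.urlopen(req). In the OpenAI response format, the reply text is at choices[0].message.content.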
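The llm.embed: item mentions semantic search. Once texts have been embedded, a common approach is to rank stored vectors by their cosine similarity to the query's vector. The Python sketch below covers only that ranking step. The three-dimensional vectors and document IDs are made-up stand-ins for real embedding output, which typically has hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction to compare
    return dot / (norm_a * norm_b)


def semantic_search(query_vec: list[float],
                    docs: dict[str, list[float]], top_k: int = 3) -> list[str]:
    """Return the IDs of the top_k documents most similar to the query."""
    scored = [(cosine_similarity(query_vec, vec), doc_id)
              for doc_id, vec in docs.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc_id for _, doc_id in scored[:top_k]]


# Toy vectors standing in for embedding output
docs = {
    "kb-reset-password": [0.9, 0.1, 0.0],
    "kb-billing": [0.1, 0.9, 0.0],
    "faq-login": [0.8, 0.2, 0.1],
}
print(semantic_search([1.0, 0.0, 0.0], docs, top_k=2))
# ['kb-reset-password', 'faq-login']
```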
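The description: and allowed_values: input keys feed the JSON Schema used for LLM tool use. These notes do not show the exact schema Cerb generates, so the following is only a hypothetical Python sketch of the idea. Input definitions, written here as dictionaries loosely modelled on automation inputs, become schema properties, and a picklist becomes an enum. Only text inputs are handled, and the inputs_to_json_schema function is hypothetical.

```python
import json
from typing import Any


def inputs_to_json_schema(inputs: dict[str, dict[str, Any]]) -> dict[str, Any]:
    """Hypothetical: turn text input definitions into a JSON Schema object."""
    properties: dict[str, Any] = {}
    required: list[str] = []
    for name, spec in inputs.items():
        prop: dict[str, Any] = {"type": "string"}  # text inputs only
        if "description" in spec:
            prop["description"] = spec["description"]
        if "allowed_values" in spec:
            # A picklist maps naturally onto a JSON Schema enum
            prop["enum"] = list(spec["allowed_values"])
        properties[name] = prop
        if spec.get("required"):
            required.append(name)
    schema: dict[str, Any] = {"type": "object", "properties": properties}
    if required:
        schema["required"] = required
    return schema


inputs = {
    "query": {"required": True, "description": "Keywords to search for"},
    "scope": {"description": "Which collection to search",
              "allowed_values": ["kb", "faq"]},
}
print(json.dumps(inputs_to_json_schema(inputs), indent=2))
```

The description text is what a model reads when deciding how to fill a parameter. This is why the same key serves both documentation and tool use.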
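The 'DatabaseHistory' memory module stores chat history for LLM sessions. These notes do not describe its schema or behavior. The sketch below only illustrates the general pattern: keep messages per session ID in a SQL table and reload the most recent ones as context. It uses an in-memory SQLite database, and the ChatHistory class and llm_message table are hypothetical.

```python
import sqlite3


class ChatHistory:
    """Hypothetical per-session chat history stored in a SQL table."""

    def __init__(self, conn: sqlite3.Connection):
        self.conn = conn
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS llm_message ("
            " id INTEGER PRIMARY KEY AUTOINCREMENT,"
            " session_id TEXT NOT NULL,"
            " role TEXT NOT NULL,"
            " content TEXT NOT NULL)"
        )

    def append(self, session_id: str, role: str, content: str) -> None:
        self.conn.execute(
            "INSERT INTO llm_message (session_id, role, content) VALUES (?, ?, ?)",
            (session_id, role, content),
        )
        self.conn.commit()

    def load(self, session_id: str, limit: int = 20) -> list[dict]:
        # Fetch the newest `limit` messages, then return them oldest-first
        rows = self.conn.execute(
            "SELECT role, content FROM llm_message WHERE session_id = ?"
            " ORDER BY id DESC LIMIT ?",
            (session_id, limit),
        ).fetchall()
        return [{"role": role, "content": content} for role, content in reversed(rows)]


history_store = ChatHistory(sqlite3.connect(":memory:"))
history_store.append("s1", "user", "Hi")
history_store.append("s1", "assistant", "Hello!")
history_store.append("s2", "user", "A different session")
print(history_store.load("s1"))
```

The limit is one simple way to bound how much history is sent back to the model as context.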
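The search performance item (APP_OPT_SQL_SUBQUERY_TO_IDS) runs some subqueries independently and merges the results by ID. Below is a minimal Python sketch of that general strategy, using SQLite and made-up ticket tables rather than Cerb's actual schema or queries. Each filter runs on its own to collect matching IDs. The ID sets are then intersected in application code, and a final query fetches the rows by ID.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE ticket (id INTEGER PRIMARY KEY, subject TEXT);
CREATE TABLE ticket_tag (ticket_id INTEGER, tag TEXT);
CREATE TABLE ticket_owner (ticket_id INTEGER, owner TEXT);
INSERT INTO ticket VALUES (1, 'Refund'), (2, 'Login'), (3, 'Invoice'), (4, 'Outage');
INSERT INTO ticket_tag VALUES (1, 'billing'), (3, 'billing'), (4, 'urgent');
INSERT INTO ticket_owner VALUES (1, 'alice'), (2, 'alice'), (3, 'bob');
""")


def ids_for(conn: sqlite3.Connection, sql: str, params: tuple = ()) -> set[int]:
    # Run one subquery on its own and collect its matching IDs
    return {row[0] for row in conn.execute(sql, params)}


def search(conn: sqlite3.Connection, tag: str, owner: str) -> list[tuple]:
    tagged = ids_for(conn, "SELECT ticket_id FROM ticket_tag WHERE tag = ?", (tag,))
    owned = ids_for(conn, "SELECT ticket_id FROM ticket_owner WHERE owner = ?", (owner,))
    ids = sorted(tagged & owned)  # merge by ID: both filters must match
    if not ids:
        return []
    placeholders = ",".join("?" * len(ids))
    return conn.execute(
        f"SELECT id, subject FROM ticket WHERE id IN ({placeholders}) ORDER BY id",
        ids,
    ).fetchall()


print(search(conn, "billing", "alice"))  # [(1, 'Refund')]
```

A real implementation would also need to cope with very large ID sets (for example, database limits on bound parameters), which this sketch ignores.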

Changed

-

[Portals/Interactions] In 'Website Interaction' portals and on external websites, interactions now start in 'full' mode and use a blur overlay on the underlying website. The previous 'popup' default style was difficult to see on some website themes.

-

[Worklists/Fieldsets] In worklists, improved the performance of the fieldset: filter.

-

[Interactions/Website] In interaction.website automations, the sheet: form element now applies styling to code blocks in markdown columns.

-

[Setup/Plugins] On the setup page, moved 'Plugins' from its own menu into the 'Configure' menu. That menu had only a single item, and the space can be used for something else.

-

[Platform/Markdown] In Devblocks, changed the Markdown parsing library from Parsedown to League\CommonMark. Parsedown has some rendering issues and appears to have been unmaintained since 2019.

-

[Interactions/Worker] In interaction.worker automations, continuing no longer hides the current step. Only the submit button is hidden, and a spinner is displayed in its place. This allows the auto-submit functionality to show progress messages.

-

[Portals/Website] In 'Website Interactions' portals, the popup now sets an explicit text color. Previously, the text color was inherited from the website theme, which caused issues in dark mode.

-

[Workflows/Tutorial] Added a 'Contribute' section to the tutorial workflow 'Welcome' tab. This includes links to help promote Cerb.

-

[Mail/Outgoing] When sending email through an SMTP mail transport, the sending server's hostname is now explicitly set. This is used for HELO/EHLO when connecting to an SMTP server. Previously, the default hostname was [127.0.0.1], which is increasingly rejected by email providers (e.g. Gmail proxies).
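For context on the HELO/EHLO change, the name a client announces is chosen on the client side. Python's smtplib is shown here only as an analogy, since Cerb itself is written in PHP. It takes this name as the local_hostname argument. When the argument is omitted, smtplib falls back to the machine's fully qualified domain name or, if none is found, an address literal such as [127.0.0.1]. The helper make_client and the hostname mail.example.com are placeholders.

```python
import smtplib


def make_client(helo_name: str) -> smtplib.SMTP:
    # No host is given, so the constructor does not open a connection yet.
    # local_hostname is the name later sent by ehlo()/helo() once connected.
    return smtplib.SMTP(local_hostname=helo_name)


client = make_client("mail.example.com")
print(client.local_hostname)  # mail.example.com
```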